Today there was a session at the SIGGRAPH conference in Boston, where Microsoft Live Labs gave a sneak preview of Photosynth. In short, it takes a large collection of digital photos, extracts distinctive features from each one, and links them together into one big model by calculating 3D positions from adjacent images.

The exact algorithm is being kept secret, but I would guess they use something like SIFT (Scale-Invariant Feature Transform) to detect image features regardless of scale and orientation, and then create a point cloud from the camera rays that pass through matching features in different photos.
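
To make the "camera rays" part of that guess a bit more concrete, here is a minimal sketch of how one 3D point could be recovered from two rays that see the same feature. This is not Photosynth's actual method, which has not been published. It is the textbook midpoint triangulation, and it assumes the camera positions and ray directions are already known. The function name `triangulate_midpoint` and the example coordinates are made up for illustration.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Dot product of two 3D vectors."""
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(
    origin_a: Sequence[float],
    dir_a: Sequence[float],
    origin_b: Sequence[float],
    dir_b: Sequence[float],
    eps: float = 1e-12,
) -> Optional[Vec3]:
    """Estimate a 3D point from two camera rays (hypothetical helper).

    Each ray is origin + t * direction. Because of noise, real rays rarely
    intersect exactly, so we take the midpoint of the shortest segment
    joining them. Returns None when the rays are (nearly) parallel.
    """
    w = tuple(pa - pb for pa, pb in zip(origin_a, origin_b))
    a = dot(dir_a, dir_a)
    b = dot(dir_a, dir_b)
    c = dot(dir_b, dir_b)
    d = dot(dir_a, w)
    e = dot(dir_b, w)

    denom = a * c - b * b
    if abs(denom) < eps:
        return None  # parallel rays give no unique closest point

    # Parameters of the closest points on each ray.
    t_a = (b * e - c * d) / denom
    t_b = (a * e - b * d) / denom

    point_a = tuple(o + t_a * k for o, k in zip(origin_a, dir_a))
    point_b = tuple(o + t_b * k for o, k in zip(origin_b, dir_b))
    x, y, z = ((pa + pb) / 2.0 for pa, pb in zip(point_a, point_b))
    return (x, y, z)


if __name__ == "__main__":
    # Two made-up cameras one unit apart, both looking at the same feature.
    point = triangulate_midpoint(
        (0.0, 0.0, 0.0), (0.5, 0.0, 2.0),
        (1.0, 0.0, 0.0), (-0.5, 0.0, 2.0),
    )
    print(point)  # (0.5, 0.0, 2.0)
```

Repeating this for every matched feature would give a sparse point cloud. A real system would also have to estimate the camera positions themselves, which this sketch simply takes as given.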

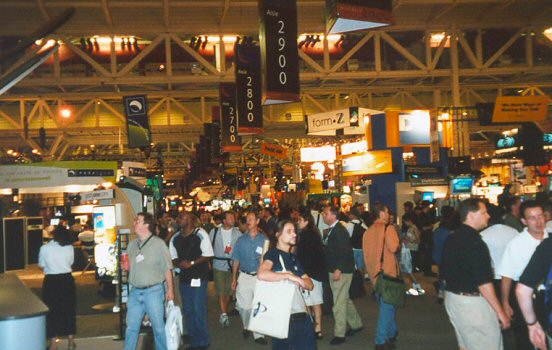

Attendees at the SIGGRAPH conference in New Orleans, 2000.

The technology looks nice, but I suppose quite a lot of photos are needed to make it look good. Also, how will the algorithm cope with photos from different batches? Their example photos were all taken at the same time, but what if some are taken in daylight, some at night, and some with obstructions such as tourists posing in front of the subject?

If this technology is made available on a site where everyone can upload their own images, I hope the photos are approved before insertion, or else it will all collapse as people add silly stuff. And what will happen if all the photos in the world are inserted into Photosynth and then linked with Google Earth?

Nevertheless, it is still an interesting idea with many applications.
